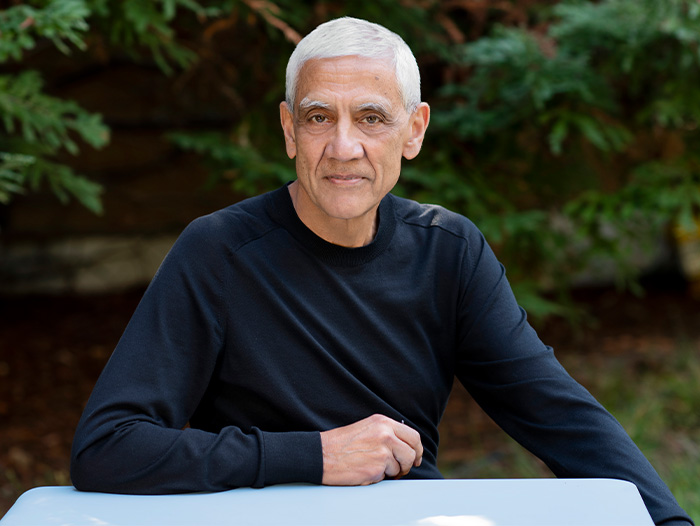

Eric: Vinod, for years as a technologist (I might even say as an anthropologist) you’ve been predicting how AI will upend and reshape entire economies, societies, maybe even civilization itself. To paraphrase some recent commentary you made at MIT, AI isn’t a coming tidal wave; it’s a tsunami like nothing we’ve seen in our lifetimes. I’m eager to get into our healthcare-specific discussion, but before we do, let’s start at a broader societal level. You and I both believe that GenAI is in the pantheon of the 20 to 25 most civilization-altering technologies in history, alongside technologies like the printing press, steam engine, and the internet. As a society, are we ready?

Khosla: Eric, you mentioned the printing press. What did it allow us to do? It allowed us to convey knowledge at an unforeseen scale. After its advent, you didn't have to sit in the same room as somebody who had knowledge to acquire that information. Sure, there were handwritten transcripts, but that didn't scale. The printing press changed all that. Moving to the motor, whether the steam engine or the electric motor, fundamentally multiplied human capabilities. You could do more work than a single human ever could and amplify individual human capability. To get to your specific question, what’s different this time is that AI amplifies the human brain — and maybe exceeds it. This is the whole AGI (artificial general intelligence) and sentient AI debate, which we can get into.

If AI is as powerful as we’re seeing — amplifying the human brain — you're now in a new domain where you can do much more than humans can, hopefully for human benefit. At that point, much of what we economically value in society will become essentially free, except for what I call “humanness.” A primary care physician: free. A tutor for your kids: free. So, whether it's structural engineers, oncologists, primary care doctors, or tutors, expertise essentially becomes free. You can analogize it to having a friend/colleague who is a Ph.D. in everything, who is also infinitely patient. You can ask them whatever questions you want. They aren’t always correct, but they know far more than anyone else in terms of depth and breadth, and they have innumerable ideas. And if that's the case, perfect information recall and synthesis is no longer a human responsibility. This emerging suite of technologies frees up humans and empowers them to do more.

Now, some people immediately point out that this will displace jobs, but from my point of view, it enables humans to do so many more things. I'll give you a very personal example. My daughter got married in May and I had to give the father-of-the-bride speech. I wrote my thoughts down, capturing what I wanted to convey. I then opened ChatGPT and prompted it to “write me rap lyrics from the following thoughts.” And then I took the rap lyrics and fed them to one of our startups generating AI music, called Splash.ai, and it sang the rap for me. So, in front of 300 people, I was able to rap with some precision and emotional heft. Keep in mind, I'm like in the bottom 1% of musical talent. But my point is that such a process will happen in every domain: lowered barriers to entry, democratized ability to upskill quickly, and new ways to apply innovative and external ideas to existing subject areas.

Consider another example: Curai Health, which creates and scales a primary care physician’s expertise. They recently had a patient come in and just chat with the AI. The patient shared that they just came back from Burning Man. “I have a respiratory infection.” The AI connected the fact that Burning Man takes place in the desert, and there's a rare fungus in the desert that your average physician wouldn't know about unless they were local to that region. Because of this incidental comment about coming back from Burning Man, combined with the complaint of a respiratory infection, the AI was able to connect the dots and the patient got a diagnosis much faster. Had they gone to their regular human PCP, it’s highly likely they would have been misdiagnosed a few times. The range of things AI does when it expands the human mind, from letting me rap to detecting the cause of a rare fungal infection, only starts to scratch the surface of what’s possible, both from the clinician’s side and, maybe more importantly, from the patient’s side.

Eric: Your construct, Vinod, resonates. The motor mechanized human brawn. And now, GenAI amplifies human cognitive capacity. But if you look retrospectively, many of these transformative technologies, especially the ones that automated manual labor, all supplanted routine, non-cognitive, non-analytic tasks. And what's different about GenAI is it’s coming for the top of the occupational pyramid: knowledge workers. It is augmenting, and then maybe even obsoleting, a lot of what high-paid, high-status professionals do: the non-routine, cognitive and analytic work. It’s the inverse of past automations. These are the accountants, consultants, lawyers, and yes, doctors. If that’s correct, that is going to be a hugely disruptive development, one that may radically reshape our economy and society.

Khosla: Yes, at a very fundamental level. I did an interview with Laura Holson of the New York Times in the year 2000. At that time, I only knew enough to say, “If AI manifests something like the way I expect, we will have to redefine what it means to be a human.”

It was clear to me even then what was going to happen with AI, but I was less sure of when.

To your point on changing the economy, I do expect to see great GDP and productivity growth. Everything economists measure will grow and be easier to accomplish. Unfortunately, I also foresee increasing income disparity. And I worry about that a lot. The good news is, if I'm right, this is hugely disinflationary. Do you need a high income if your doctors and education are free or cost one dollar a month? I've been recently arguing that the entities setting up software infrastructure for governments set up a national ID system and digital infrastructure for payments. I’m telling them that they should include free primary care, free chronic care, free tutors, and free legal services in their digital infrastructure because it's far cheaper and better than the very poor level of service they're offering right now.

If we imagine this world, the next question becomes: What about the activities that still require physical labor and impact the cost of goods? I think in the next 25 years we'll have a billion bipedal robots, and they'll do more work than all humans can do today. So, goods will be nearly free. The labor part of the cost of goods production will disappear.

It’s hard to predict precisely, of course, but directionally, I'm certain I'm right. On the specifics: I'm certain I'm wrong.

From Keynes to Khosla: Why this may turn out to be more utopia than dystopia

Eric: So an AI-enabled future may have all sorts of economic dislocations — not just unemployment, but disemployment — the obsolescence of a lot of professions. But the marginal cost of goods and services will essentially go to zero, providing enough wealth that societies can provide a universal basic income for all.

What you’re predicting makes me think of a 1930 piece by John Maynard Keynes called “Economic Possibilities for our Grandchildren.” Keynes looked at all the mechanization that happened in the 1920s and projected that within 100 years (so we’re talking 2030, not too far away from the time you’re predicting) we will have become so productive as a society that the very idea of ‘work’ will become an anachronism. Keynes said we’d need to work 15 hours a week, and that purely for the spiritual fulfillment and purpose of it.

What the two of you are saying — I’ll call it ‘from Keynes to Khosla’ — is that we’re going to enter a ‘post-scarcity’ society, and our basic human relationship with work will have to be redefined.

Khosla: Yes, it will. I believe sometime in the next couple of decades, society will be capable of (and I use the word ‘capable’ carefully) eliminating 80% of 80% of all jobs. So that's 64% of all jobs in the next 20 years. That has implications for a kid born today or young people going to college today. Of course, this is just a guesstimate given all the uncertainty around how our society will respond and either embrace or run from the changes.

That's a massive structural shift, deflationary for sure. But also, I think the need for work will disappear, as you pointed out, Eric. I agree that human motivation is key to societal progress, but I don't think that motivation for progress is tied to work. It's tied to work for some people. But if you're an assembly line worker and you assemble the same widget for eight hours a day for 30 years in a row, my guess is that’s not exactly the work you want to do. So first, that kind of work will disappear.

The flipside is that people will get to pursue the work that feeds their passion. Consider that today, not everyone can be a musician. But we have a music AI, and there are 13-year-old kids on Roblox who have done literally thousands of concerts themselves. Why? Because the music AI makes every one of these kids feel like they're a rockstar. And they hold concerts so others can come watch them play music.

So, the need for work will disappear and people will be empowered to follow their passions. Right now, you can be an artist, but if you choose that career, you've chosen a life of struggle — or at least a very low probability of high remuneration. After all, not everyone can be Beyoncé. But I think economic abundance will make a career in music, if one chooses, satisfying and achievable. If we take GDP growth from 2% to 4% in this country — and I believe this is very possible with the productivity gains we’re projecting via AI application — we will probably double or triple per-capita income over 50 years. Just by going from 2% to 4% GDP growth annually.

If that’s the case, there’s going to be enough to share.
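
As a rough check on the growth arithmetic above, here is a minimal Python sketch. It assumes constant annual compounding and that per-capita income tracks GDP one-for-one (population held flat); neither assumption is stated in the conversation, and the function name `income_multiple` is purely illustrative.

```python
def income_multiple(annual_growth: float, years: int) -> float:
    """Factor by which income grows after compounding annual_growth for `years` years.

    Assumption (not from the interview): growth is constant and compounds annually.
    """
    return (1.0 + annual_growth) ** years


YEARS = 50
baseline = income_multiple(0.02, YEARS)  # the 2% growth path
faster = income_multiple(0.04, YEARS)    # the 4% growth path
relative_gain = faster / baseline        # how much larger the 4% path ends up

print(f"2% for {YEARS} years: {baseline:.2f}x")
print(f"4% for {YEARS} years: {faster:.2f}x")
print(f"4% path relative to 2% path: {relative_gain:.2f}x")
```

Under these assumptions, the 4% path ends up roughly 2.6 times the 2% path after 50 years, which is in line with the "double or triple" estimate.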

Eric: That, of course, becomes a societal redistribution question.

Khosla: Yes. It’s a hard redistribution problem. It's a social question. Society will have the ability to say, “We will redistribute this abundance.” Some societies will do so, and others will say, “We have to stop this technology because it's replacing jobs.” That's a choice society will make. I'm convinced China will force these applications forward, even if they have to use Tiananmen Square-type tactics.

Then there’s the question of how fast this happens. The democratic process doesn't love radical change, but we've gone through this kind of change before. In the year 1900, most employment in the United States was agriculture. By 1970, it was about 4%. So, we've gone through this radical shift before, but we did it over three generations. This time it may be one generation, and that poses a unique set of adjustment challenges.

But equally, the very technology that causes this dislocation can also be the salve. Our methods of communication will be better. Our ability to tailor and teach will be exponentially stronger. Solutions like personal tutors will be ubiquitous, agile, and low-cost. We can afford a personal tutor for every person. We will have the tools to adapt. But I can’t predict what will slow this down, what will happen in which country or which part of the world. But this is a fabulous opportunity to put humanity in a very different place.

Eric: Here’s one last societal, even philosophical, question before we turn to healthcare. You seem to have an optimistic vision of this abundant, ‘post-scarcity’ society. It's interesting that Keynes’ view was less optimistic. He said that humanity would have a hard time adjusting to a world without the struggle for survival. He pointed to the indolence of British aristocracy as a less-than-edifying example of what might happen when people found themselves with nothing but leisure. Is abundance going to lead to decadence, or is abundance going to lead to some kind of utopia?

Khosla: I think this is fundamentally a question of “at what age do you get to realize you have the freedom to pursue your own passions?”

If, at age six, you start teaching kids that they should be thinking about how to use their time to stoke their passions — instead of instilling in them that they have to do well at school because one day they will need a job — that’s a very different set of formative years for the human brain than if you instigate that conversation with someone at age 40.

My conception at age 68 is that I have a 25-year plan of what I want to work on because I'm free to do what I want. I take my freedom and say I can, and still want to, work 80 hours a week. And, of course, I read incessantly. I have a passion for learning, and I pursue it. There's infinite possibility if we train five-year-olds to think in this way.

I gave a talk at Stanford Business School in 2015 and discussed this fact, and I tweeted it again just recently. Most people are driven by what's expected of them — and by their neighbors. But the world I’m striving for, the one that we can unlock with these technologies, is one where real happiness comes from being internally driven, i.e., by your passions.

How disruptive might this AI paradigm shift prove to US healthcare?

Eric: Vinod, you mentioned America’s evolution from an agrarian to an industrial economy, and with it the collapse of U.S. agricultural employment from 50% to 4%. But this took three generations to play out—society had lots of time to adapt. Let’s analogize this to what may happen in the U.S. physician community with GenAI.

Doctors are the most respected profession in the United States, followed by nurses, firefighters, and members of the military. They are also justly well-paid. In fact, nine of the top 10 highest-compensated occupations in the country are medical. But what we’re discussing is a technology that may disrupt knowledge workers across a multiplicity of areas. I can see good and bad dimensions to this for doctors. We’ll certainly see administrative simplification and automation/elimination of low-value activities, perhaps solving physician scarcity and geographic maldistribution issues, and that’s great.

But what happens if and when we see foundation models evolve into a multimodal medical ‘superintelligence’—synthesizing exabytes of data from EHRs, medical images, biomedical literature, clinical trials, pharmacogenomics, bioinformatics, social determinants, etc.? What happens when the ‘hallucination’ issues with large language models are fixed, and we can rely more fully on these encyclopedic insight engines for diagnostics? Will the technology replace or even obsolete much of what physician judgment is today?

Khosla: Your assumptions could be right, Eric, but I don’t think they have to be right.

AI will put deflationary pressures on the cost of medical expertise (by $200-300 billion per year) and enable doctors to practice the uniquely human aspects of medicine, along with providing highly specialized procedures and consults that can’t yet be done by AI.

I do think broad primary care (well beyond what vendors like Oak Street are doing today) and mental healthcare will be heavily influenced by AI, if we choose to adopt these technologies. AI primary care and AI mental healthcare, as companies like Curai Health and Limbic.ai are pursuing, will cost less than $10 per person for near-unlimited use and will offer much higher quality, accessibility, and breadth of care, including preventing and managing chronic diseases through frequent touchpoints — which is currently a very large part of healthcare spend.

Already, Limbic is being used to do patient intake at 33% of all NHS mental health clinics in the U.K. Limbic Access can classify the eight common mental health disorders treated by NHS Talking Therapies (IAPTs) with an accuracy of 93%, further supporting therapists and augmenting the human-led clinical assessment. In a study of 64,862 patients, Limbic showed that using this AI solution improves clinical efficiency by reducing the time clinicians spend on mental health assessments. They found improved outcomes for patients using the AI solution in several key metrics, such as reduced wait times, reduced dropout rates, improved allocation to appropriate treatment pathways, and, most importantly, improved recovery rates.

But with all of this, physicians must be brought along. Conversations about compensation and new payment models to reward this new kind of work are key. In the negative sequence of events, society may be capable of adopting AI, but it won't because physicians resist it. If you want to bring the doctors along, you must protect their income. I've spent a fair amount of time talking to Jim Madara, who's the president of the American Medical Association (AMA). If we adjust billing codes, we can make sure that the physician’s income doesn't get disrupted while they adopt AI today.

First, let's look at the work of the physician. 30% of their time is spent on administrative tasks. Jim told me that since the pandemic, 20% of all primary care and specialty physicians in the country have declared they want to retire in the next five years. And we see that also at Massachusetts General Hospital. They’re simply not taking new patients for primary care, because they aren’t able to hire enough physicians, which is sad. It’s unfortunate that’s happening in a metro area like Boston, in the middle of the developed world, in one of the global epicenters of medicine!

But if you buy my back-of-the-envelope calculation that 80% of what physicians do could be done by AI, then you start to solve many of their problems. A physician will be able to double their patient panel within the next three years, and quintuple it in four years. For the sake of argument, let’s say in the next five years, physicians double their patient panels.
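
The back-of-the-envelope relationship between the share of work handled by AI and panel size can be sketched as follows. This assumes a physician's total working hours stay fixed and that time per patient shrinks in proportion to the automated share; both are simplifying assumptions, and `panel_multiplier` is a hypothetical helper, not something from the conversation.

```python
def panel_multiplier(automated_share: float) -> float:
    """Return how many times larger a patient panel could be.

    Assumptions (illustrative only): total physician hours are fixed, and the
    physician's time per patient falls in proportion to the automated share.
    """
    if not 0.0 <= automated_share < 1.0:
        raise ValueError("automated_share must be in the range [0, 1)")
    return 1.0 / (1.0 - automated_share)


# Compare a 50% automated share with the 80% figure from the discussion.
for share in (0.5, 0.8):
    print(f"{share:.0%} automated -> {panel_multiplier(share):.1f}x panel")
```

On this simple model, doubling a panel corresponds to about half of the work being automated, while the 80% figure corresponds to a fivefold panel.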

The even better news is that many of the tasks AI will do well are tasks physicians don’t enjoy. For example, AI can eliminate the 30% of their time they spend doing documentation; AI scribing is already starting to work on this. Then there is the massive amount of data generation that is continuing to grow. Physicians don’t need to look at non-informative routine information — their attention should be on the surprises, the edge cases, and on having AI guide their attention.

Care should be a collaboration between a patient’s goals and their physicians. Freeing up physicians to spend time exploring options to match patient goals (and making sure the patients get the right information they need) is massively facilitated by AI. This dynamic is also empowering for the patient. The increased availability of information and advice allows the patient to be the CEO of their own health and health choices. Geographic maldistribution goes away, as you point out, Eric, but the physician gets the same income for handling twice the number of patients, and they have many more encounters.

Eric: We know this is especially important, for example, when managing chronic conditions.

Khosla: Right. In the U.S., the average number of primary care visits per patient is one-fourth or one-fifth of what it is in Australia. Think about it – we could have five times the number of patient interactions. And that’s great news for clinical quality and outcomes because we know that frequency of interaction is positively correlated with patient health.

Primary care includes chronic care for diabetes, hypertension, etc. To do that well, Curai talks about having 100 touch points for every patient, every 100 days. Curai has examples of dropping somebody's blood pressure by 30 points just by frequent interactions, or better behavioral health. This is much more fun and much more fulfilling for the physician, and of course there’s the added benefit of not having to spend their time on administrative work. We’d leverage AI to help us achieve this.

Eric, I'll give you one more fun case of what this could look like. We have a little company in the U.K. called Tortus. They're doing the following: A physician only talks to their AI. The AI then talks to Epic. So, the physician never touches a keyboard. They can dictate notes, and it shows up in Epic within five seconds. In one fell swoop, you eliminate the single largest source of frustration for physicians and add 30% capacity to redeploy to direct patient care.

If I were training to be a doctor today, I’d assume some significant flattening of pay disparities. Specialist consults for things like dermatology, cardiology, neurology, or endocrinology may be less necessary because the primary care doctor will provide more of the integration and human element of care with advice from AI specialists. The specialist’s value add today is focusing on an esoteric knowledge base and having a specialized pattern recognition system that they try to keep up to date, which AI is better at doing than humans.

It has already been demonstrated in many advanced care models that one of the best ways to reduce healthcare costs and improve patient outcomes and satisfaction is the integration of “curbside” specialist consults into routine primary care. Here, we’re getting the specialized answer the primary care provider and patient want and need immediately. AI will make this available to all primary care providers at effectively zero marginal cost.

Eric: You mentioned a number of specialties, but I noticed you didn’t mention oncology.

Khosla: Oncology is an interesting case because a lot of the challenges around treating cancer patients involve the integration of huge amounts of knowledge from different domains, information that is changing constantly due to the latest research and findings. Patients go to comprehensive care centers to receive integrated views from subspecialized oncologists, oncologic surgeons, radiologists, radiation oncologists, specially trained pathologists, and other domain experts. These specialists need to integrate current research knowledge with their own experience (i.e., summarizing past patient trajectories), and make projections based on a single patient, their medical history, their imaging data, and their genomics and proteomics data. Each patient needs a framework for guiding their decisions about what care they should receive based on their particular goals.

Imagine each patient, anywhere, assembling their own tumor board without having to visit one of the specialty treatment centers for the best care. This will be especially powerful in small towns and villages in countries like India, where no oncologists are available. This is obviously such a fertile ground for the use of AI tools, and we have yet to see someone really doing it the right way, but it will happen.

Eric: This could revolutionize our PCP situation in the U.S. We have something like 225,000 PCPs in the country, the same demographics as other specialties, with roughly a third over age 65. And yes, we have a persistent shortage, with major accessibility issues. But what you’re describing, Vinod, especially the administrative simplification fixes, would alleviate this.

But the more profound, enduring GenAI impacts may alter the very practice and philosophy of medicine itself. If doctors have access to a future encyclopedic, recursively self-improving, multimodal GenAI ‘copilot’ (with instantaneous access to up-to-the-minute medical, pharmacological, and behavioral insights to guide their diagnostic and treatment decisions), doesn’t that change the nature of the work itself? Memorization and quantitative skills, which are in large part what we screen and select for in medical school admissions, become suddenly a lot less important.

Khosla: I think you’re right. I told Jeff Flier, when he was Dean of Harvard Medical School five years ago, they ought to use criteria other than IQ in admissions. I told him that if a student has to be a super high-IQ nerd to get the grades to gain admission into Harvard or Stanford Medical School, then they’re not going to care about the human element of care. I literally told him to screen for empathy (putting yourself in somebody else’s shoes) as a key criterion for doctors. I suggested he look for admissions criteria at the UCLA Film School. He didn't take me seriously, but I do believe that.

But I think in part, Eric, you’re asking about the transition as much as the nature of the job of the physician. I think we have enough flex in the system to shift gently over a longer period. We always talk about reducing healthcare spend, but we don't talk about at whose expense. Who do we not pay? It's a reality of healthcare nobody wants to upend. But the reality is that someone’s cost is someone else’s profit.

With AI, we can ask what the best service is for the need at hand. And in many cases, what the patient wants, and what is clinically necessary, isn’t the most expensive service. In fact, over time, we can provide recurrent service at the same price point, especially as technology improves. No payer will mind you providing more services at the same expense.

We can increase the quality of care and the frequency and number of touch points while keeping the premium dollar and overall healthcare spend the same. We can also keep people relatively comfortable in their place during the transition. The only people who lose are those who don't adapt.

AI will, of course, make expertise much less expensive, from primary care physicians to oncologists and endocrinologists, but it will also expand the capabilities of imaging and diagnostic tests. There are several examples of this. With AI, we can replicate the work of a cardiac MRI technician through self-driving software for MRI machines, like Vista has done, reducing the duration of a cardiac MRI exam to less than 20 minutes in the scanner. This decreases cost, increases throughput, and allows for safe utilization of cardiac MRI protocols in areas where no specialized technician is available.

Caption Health developed self-driving software for ultrasound, allowing a cardiac ultrasound technician to be trained in days. An Uber driver could be trained easily to do an FDA-approved at-home cardiac ultrasound. Genalyte hopes that every patient coming in for a doctor's visit will get a single, low-cost test in the ten minutes before their appointment that quantifies 85% of all biomarkers a primary care physician might ask for. It will be cheap enough to reduce the cost of any test the primary care physician orders while enabling same-visit test results for patients, which should eliminate the need for follow-up visits dedicated to discussing test results. In addition, adverse drug interactions will be reduced without needing more clinical pharmacists by leveraging AI to monitor all prescriptions and patient records and personalizing drug dosing based on a person’s genetics, transcriptomic and proteomic snapshots, and other factors, at a near-free cost of expertise.

Eric: Your ‘natural selection’ comment resonates. Those who adapt should be fine in this transition; those who don’t are vulnerable. So far in our conversation we’ve only discussed clinicians. Let’s turn to the administrative side of the equation.

Historically, healthcare hasn’t been great at embracing productivity-enhancing technologies. Technology in most industries is deflationary, as you pointed out. In U.S. healthcare, it has proven the opposite — healthcare is the only U.S. industry that has seen negative productivity growth over the past generation. And a lot of this is because of administrative friction, which costs an estimated $1 trillion annually. Instead of deploying technology to fix this administrative complexity, we’ve traditionally added people. And now U.S. healthcare employs some 18 million Americans. 450,000, by some estimates, are administrators – an increase of 3200% over the past 50 years.

What I’m hearing you say, Vinod, is that these are the kinds of occupations that can be hugely disrupted by GenAI. What happens to them in this brave new world?

Khosla: They should be worried.

Eric: Already, some estimates point to as much as $450 billion in U.S. administrative costs that could be theoretically eliminated by GenAI. While this is speculative, it makes intuitive sense, which brings us back to your point that someone’s waste is someone else’s profit. I suspect that’s an element of your theory on changing physician reimbursement codes: create savings from eliminating admin waste and redirect them to subsidizing this transition you’re describing for clinicians?

Khosla: Exactly. That’s why I'm meeting with the AMA to tell them to change their billing codes to make this transition happen.

Here’s a simple example. Let’s say an AI does a patient intake and completes a diagnosis and prescription. You can clearly have a physician, even a retired physician, take two hours a day to review and agree or disagree with those summary findings. If they want, they can ask some additional questions. All of that helps train the AI. It also keeps the physician engaged, and they can bill for it. And these people who are over 60 or 65 and want to retire can retire in Hawaii and do this job remotely.

We'll increase physician capacity — fix the shortage, as you point out — and if they are supervising the AI and getting paid for it, doctors won’t fight it. Economic incentives are key, as we all know from human behavior.

Now, let's say you had a physician visit. The doctor is too busy to call you tomorrow or even three days later to check and ask how things are going. But the AI could talk to the patient every day and then determine, as an example, if we need to change the dosage. The physician could then intervene three days later or approve it and get paid. So, you could dramatically increase the number of patient interactions. You could do that with AI — and at a lower cost — if you set the billing system up correctly.

The overall message I’m communicating is that I think for the next two decades, the increase in care frequency and AI oversight by physicians will absorb the physician capacity of those physicians who don't want to retire, as well as offer extra capacity from “AI assisted” physicians to improve care at a constant cost. It just needs an adjustment of the billing codes to protect the physician when they use AI.

The next big leap in hyper-personalized care

Eric: Most of our healthcare discussion has focused on the supply side (provider). Which makes sense, given our reimbursement dynamics. But of course, AI is also changing the dynamics on the demand side (patient), especially in how it may directly empower individuals to provide either self-care or self-monitoring around chronic conditions. In some cases, patients are willing to pay out-of-pocket for these services. A lot of the innovation in this space predates foundation models and LLMs, including earlier generations of AI categories like machine learning, robotic process automation, and computer vision. How are you seeing this play out? I know a lot of your investment strategy and capital deployment goes into this space.

Khosla: Yes, that’s true. Let me give you a couple of examples because it’s helpful to be specific.

AliveCor sells a $99 electrocardiogram (ECG) device—the KardiaMobile Card. Do you know how often these devices are used? Some people say that individuals don't pay for healthcare, but there are 250,000 cardiac patients that pay $10 a month out of pocket because there's no reimbursement for what’s being offered. They choose to self-pay because the service is important to them, they might die otherwise, or because they may have to rush to the emergency room. The device itself is standard credit card thickness and gives you a diagnosis on your phone. Within the next few months, 20 or so medical cardiac conditions will be diagnosed by that little device. We have proof that says this detects more atrial fibrillation than a Holter monitor. Think about the implications that easily accessible, affordable measurement and care has on compliance, frequent monitoring, early detection, and close management of patients.

Consider Sword Health, which does physical therapy remotely with computer vision. We found 50% of patients use the technology on Christmas Day; that’s how committed patients are to the opportunity to easily access care. And it has wide-ranging applications, from MSK physical therapy to pain management, whether it's pelvic pain in women, which is a major condition, or chronic pain. This is important because so much of MSK work has become chronic care management, but applications like this will avoid a lot of it. These are non-invasive and more affordable options that patients will use. Overall, it's a very clear plan. Expertise in AI makes care accessible and better for everybody.

OpenAI, foundation models, and coming stages of algorithm development

Eric: You were famously one of the first outside investors into OpenAI and have had a front-row seat as the technology progressed from GPT2, to ChatGPT, to GPT4 and beyond. I’m curious to hear your assessment of foundation models overall right now, especially as we move toward greater multimodality. Are we starting to see LLMs plateau in their capabilities? This question obviously has a lot of implications for healthcare, especially as we move to pre-training models on private, proprietary healthcare data sets.

Khosla: People always look at the very short term, but generally, there’s no sign we’ve finished scaling. We are very early in the development of AI, and much more capability is to come. We are still at the Motorola StarTAC mobile phone stage, which looks good compared to landline phones, but the iPhone 1 to iPhone 13 and beyond of AI are still to come.

Let's look at the following fact: AI is training on data generated for humans on the web. That means humans can read it. But I've long argued feeding human-readable data into AI is a bad idea — or at least a limiting one. I was 17 when I started working on an ECG printer (a thermal printer) at IIT Delhi. I looked at it and considered that, in audio, we talk about frequency response. What's the frequency range? What's the total harmonic distortion? ECGs are the same because the human eye can only resolve certain things. If we collected all the data, and not just the ones humans can read, we could get a lot more information out of it.

Eric: What's an example of non-human-readable data?

Khosla: Well, take today's ECG. Humans can't perceive an ECG's signal in fine-grained enough detail, so we take gross measures like the interval between certain pulses and things like that.
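To make "gross measures" concrete, here is a minimal sketch of the kind of summary a human-oriented ECG reading reduces to: the gaps between successive beats and an average heart rate. The timestamps and function names are hypothetical and for illustration only; this is not AliveCor's method, and real devices detect beats from the raw waveform first.

```python
from statistics import mean


def rr_intervals_ms(r_peak_times_ms):
    """Return the gaps, in milliseconds, between consecutive beat timestamps."""
    return [later - earlier
            for earlier, later in zip(r_peak_times_ms, r_peak_times_ms[1:])]


def mean_heart_rate_bpm(r_peak_times_ms):
    """Average heart rate implied by the mean beat-to-beat interval."""
    intervals = rr_intervals_ms(r_peak_times_ms)
    if not intervals:
        raise ValueError("need at least two beats to compute an interval")
    return 60_000 / mean(intervals)


# Hypothetical beat timestamps (ms), assumed already detected from the signal.
peaks_ms = [0, 800, 1600, 2400, 3200]
intervals = rr_intervals_ms(peaks_ms)
bpm = mean_heart_rate_bpm(peaks_ms)
print(intervals, bpm)
```

Everything between the beats is discarded by a summary like this, which is the information the surrounding discussion suggests an AI could still use.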

AliveCor has an FDA submission that has been granted breakthrough designation to determine blood potassium levels to detect hyperkalemia from a $99 ECG you buy on Amazon. That's AI extracting data that a human could not.

You can predict osteoporosis from old x-rays. That comes for free because the AI can read that data. I had this argument with Kaiser probably seven years ago, because they deleted their high-resolution data. Their thinking was that, from a human point of view, they couldn't read anything more out of it, and it was too expensive to store. But I said, AI will be able to read it; it’s coming. And you'll be able to predict osteoporosis, bone density changes, or other issues ten years in advance by virtue of feeding the AI these kinds of data.

Here’s another example. We invest in a company that measures continuous blood pressure in a watch-form-factor wearable. It's approved in Switzerland and there are 50,000 users for continuous management of hypertension. But the interesting factor is you can predict stroke in advance from blood pressure dynamics. No human being could look at continuous BP data and predict stroke, but an AI could. How much expense could you avoid by having every hypertensive patient wear a continuous blood pressure monitor and avoid a stroke?

On the genomics side, we have a small company called Mirvie that can predict prenatal complications from transcriptomic data in a blood draw from the pregnant mother. That is precision medicine — and what I call change. The practice of medicine that humans evolved for good reasons can become the science of medicine.

Eric: A final question on the tech: Where do you see the algorithms going from here? What’s the direction of travel for their evolution, especially in terms of how they’ll be trained (parameter counts, attention windows, new volumes of synthetic data, etc.)? I’m inferring from your comments that you think foundation models have a lot of runway left.

Khosla: Yes, I do. The algorithms are getting better, so the transformer model will continue to improve. Reinforcement learning is another big thing that's coming along. They call it RL or RLHF (reinforcement learning from human feedback). Now we’re starting reinforcement learning from AI feedback (RLAIF), recursively. It’s like having one large language model grade another, and do it recursively.
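The "one model grades another" idea can be sketched in a few lines. This is a toy illustration under stated assumptions, not any lab's actual pipeline: both the answering model and the grader are replaced by deterministic stand-in functions, and the scoring rule (counting distinct words) is a hypothetical placeholder for a real grader model's judgment.

```python
def generate_candidates(prompt):
    """Stand-in for the model being trained: returns canned candidate answers."""
    return [
        "It measures the heart.",
        "An ECG records the heart's electrical activity over time "
        "using electrodes on the skin.",
    ]


def grade(prompt, answer):
    """Stand-in for the grader model: distinct-word count as a crude proxy for detail."""
    return len(set(answer.lower().split()))


def build_preference_pair(prompt):
    """Label the best and worst candidate using the grader instead of a human."""
    ranked = sorted(generate_candidates(prompt), key=lambda a: grade(prompt, a))
    return {"prompt": prompt, "chosen": ranked[-1], "rejected": ranked[0]}


pair = build_preference_pair("What does an ECG measure?")
print(pair["chosen"])
```

In a real system, pairs like this would feed a training step in place of human preference labels, and the improved model could in turn act as a grader, which is where the recursion comes from.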

It is interesting to have GPT4, but more interesting to speculate on what GPT5 might look like, especially, say, when GPT5 itself trains GPT6. So, there’s a lot of progress to come. Reasoning, probabilistic thinking, and other capabilities will all emerge in the next few years and will keep improving to get to stunning levels in the next five to 10 years. The alphabet soup of terms we see like RL, RLHF, RLAIF, RAG, knowledge graphs, multimodality, and the many debates around AI approaches, are all indications of the vast innovation that is happening.

I also like this idea of training for AI. We have a company that generates curriculum for AI, not humans, to learn from. How would you write a textbook if the student was an AI and not a human? It's very different.

We are even betting on the radical idea that most internet access will be by AIs, not by humans. Agents will access the internet for tasks humans assign them, and there will be billions of agents running around 24/7. If that happens, you may see a reformulation of the whole Internet toward AIs learning from it and getting work done, rather than toward human learning. I hope, and believe, all of this will be guided by human agency.

I also believe that we’ll see increased specialization of AI tools. When you need to complete a complex calculation, you use a calculator application or a billing lookup table for CPT codes. The same will happen for AI, where AI will call up tools just as easily. I think the AI will have some reasoning capability, but it'll know when to call a reasoning tool or a specialized CPT codes table or diagnosis tool because it's a particularly complex or high-value problem. And if I don't want it to hallucinate, I might call a tool with a particular capability or focus.

For example, if I want to do diabetes chronic care teaching or mentoring, I can use all of the web. But I could also tell it to only source coaching from the Joslin Diabetes Manual. Then the chance of hallucinating goes down dramatically.
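A minimal sketch of the two ideas above: routing a request to a specialized lookup tool, and answering only from one approved source instead of the open web. All names, the `code:` prefix convention, and the placeholder passages and codes are hypothetical; nothing here reproduces real CPT entries or text from any actual manual.

```python
# Hypothetical stand-in for passages drawn from a single trusted manual.
APPROVED_PASSAGES = {
    "hypoglycemia": "Placeholder passage about recognizing low blood sugar.",
    "foot care": "Placeholder passage about daily foot checks.",
}

# Hypothetical stand-in for a billing code table (not real codes).
BILLING_TABLE = {"DEMO-001": "Placeholder description"}


def answer_from_approved_source(question):
    """Return matching approved passages, or None rather than guessing."""
    q = question.lower()
    matches = [text for topic, text in APPROVED_PASSAGES.items() if topic in q]
    return " ".join(matches) if matches else None


def route(request):
    """Send 'code:' requests to the lookup tool and everything else to the approved source."""
    if request.startswith("code:"):
        code = request[len("code:"):].strip()
        return ("billing_lookup", BILLING_TABLE.get(code))
    return ("approved_source", answer_from_approved_source(request))


print(route("code: DEMO-001"))
print(route("What should I know about foot care?"))
print(route("Tell me about stock prices"))
```

The design choice that matters is the `None` result: when the approved source has nothing relevant, the system declines instead of improvising, which is one simple way the chance of hallucination can drop.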

GenAI and molecular generation

Eric: Vinod, here’s one final healthcare question for you. A key area we haven’t yet explored is AI and synthetic biology. Yet another transformative GenAI breakthrough came from Google and DeepMind’s AlphaFold2, which can now predict three-dimensional structures of proteins from amino acid sequences with atomic-level precision. In other words, AI has finally solved one of the hardest problems in computational biology and chemistry — a challenge that stood for basically half a century. What are the implications here for drug development and discovery?

Khosla: I think it’s moving faster than many people may think and can get more specific and personalized. Biologics for one person! In fact, we started a company called EveryONE Medicines based off “N of 1 Medicine”. Its approach is to ask whether we can design drugs for single individuals. Regulators want people with ultra-rare diseases to have access to new therapeutics, so we are working with them to establish the right approach. The development of the drugs, and the analysis of the data to support moving forward with human testing in the individual, will rely on AI at scale. The newly approved Sickle Cell Disease treatment is just the start of a wave of therapies that will use genomic therapies. And in many cases, they will be curative, not just treating symptoms. When a treatment uses a genomic modality, drugs become more like a cellular reprogramming problem, and even more amenable to data-driven and AI approaches.

A challenge in the space is delivery, and we have several investments here since it’s now the major limiting step in the next generation of therapies. How do you safely deliver a payload of interest into the cells and tissues of interest? For example, Bionaut is using externally applied EM fields to drive tiny little bots around the body to deliver payloads into, for example, deep structures in the brain. Liberate Bio is using AI to design injectable particles to deliver genomic payloads to specific cells and tissues. Our company Cellino is using AI to design personalized cell therapies, which could include things like treatments for retinal diseases and Parkinson’s disease.

Of course, AI is also helping with the design of modalities like small molecules, and our companies like Atomwise and Charm Therapeutics have made enormous progress in designing small molecule therapies. Our company Nabla is doing the same in the design of antibody therapies. Every time we hear an update from these companies, progress is being made, which just feeds into my enthusiasm about the near-future capabilities we will have to treat many conditions that have no cure today.

It's also worth noting that robotics and automation are playing a role. As our company OpenTrons and others dramatically drop the cost of doing lab work, and as we use AI to help design experiments, the cost of development will go down. Improvements using AI and automation will improve the quality and lower costs in drug manufacturing, especially for the next generation of complex biologics and genomic medicines.

We have another company, Vivodyne, which uses AI on highly multiplexed models of a range of human tissues. That is helping translate drugs' applicability to humans. Especially as we transition to more specialized and human-centric medications, like genomic therapies and cell therapies, it will be increasingly important to move away from animal models whenever we can. There are, of course, also ethical considerations in animal testing, and new regulations in the U.S. and Europe to direct drug developers away from animal models when possible.

What is often neglected is that the major cost of drug research and development is testing drugs on humans. An FDA-registered trial is an obvious example. However, now there is increasing focus on post-approval tracking being required by regulators and payers. Also, increasingly and appropriately, decisions about what medications will be reimbursed or included on formulary are coming from data, rather than a drug salesperson convincing a doctor to prescribe their particular medicine. We can think about that a few different ways. First, it may add expenses in data collection, but as we use connected devices, lowering costs of technologies like RNAseq that generate a lot of useful data, and AI tools to interpret the information, the cost to get to the answer will go down. Second, pharma currently spends nearly twice (1.7 times) as much on the sales and marketing of drugs as it does on developing them, when a lot of that money could be better spent on collecting data on how to better use approved drugs on the right patients. AI will increasingly be used to help make decisions about which patients should receive which treatments.

This potential already exists today. Scipher Medicine is already using transcriptomics patterns to predict which patients will respond to an expensive biologic like Humira, which saves the patient and payor much heartburn over administering it to non-responders.

We have not discussed in-hospital care much, but AI will play a large role there too. Inflammatix can do the same in early prediction of sepsis in the hospital, just like Mirvie is using transcriptomics to predict premature birth. All three of these companies developed their diagnostic tools using what were state-of-the-art data analysis and machine learning tools to analyze genomics data. However, the tools have advanced substantially in the intervening handful of years, and companies like our Ultima Genomics are driving down the costs to collect this data. The next wave will be even more powerful.

I believe in this decade it will be economical to administer these types of transcriptomic tests to every hospital patient daily to predict what might happen to the patient that day, making suggestions about medication and care adjustments, and reducing adverse and expensive events. Going forward, this will likely be the norm.

Final reflections

Eric: Vinod, I’d like to close on a personal question. You’ve had an unmatched venture track record and an uncanny ability to accurately predict the future. Part of the reason for that, and I’m speculating here, is your command of so many disparate, interdisciplinary topics like medicine, data science, AI, synthetic biology, fusion, hypersonics, clean energy, and so on. How do you stay on top of all these disciplines?

Khosla: I think I got very lucky, and it was by accident. I didn't know all this. I was an electrical engineer at IIT, Delhi, but then I got interested in the electrical phenomena in the human body, the brain, and the heart. So I went to a professor there and proposed that we start a biomedical engineering program in Delhi with the All India Institute of Medical Sciences, which is close by. We started 50 years ago, in 1971 or 1972. Then I went to Carnegie Mellon, and I decided I’d do my master's degree in biomedical engineering. And then I went to Stanford Business School and got my MBA.

And what accidentally happened is I learned to generalize. I had a deep interest in science, where very strong basics are key. I understood physics, I understood chemistry, I understood math. But I do think the generalization has helped my learning to be more dynamic.

The advice I’d offer to anyone going through college is that they should do enough diverse things to build a foundation to learn anything new, because they don't know what their job will be in 10 or 20 years. If you look over the history of innovation, a lot of the transformational innovations have come interstitially between disciplines. And they’ve often come from those who are able to generalize from their domain expertise and comprehend in an unexpected, unconventional way in a new domain. They were willing to take the risk of failing in a domain where they had more limited knowledge.

In fact, I'll tell you a funny story. I get invited to do a lot of talks. The director of Lawrence Berkeley Lab, a premier lab in the country, had an offsite with his team and asked if I could be at the dinner. He wanted to encourage innovation. The first question I got was, “How do we increase the amount of innovation at Lawrence Berkeley Lab?” To which I answered, “Swap spots.”

I recommended they put all the department heads, the head of nuclear, the head of biology, the head of chemistry into different positions. They're all accomplished people. There is no question about their IQ. But they're experts in one discipline, so they have real intellectual capability, but also have learned the conventional wisdom in their area. And nobody gets to challenge them because they're so powerful in their discipline.

I literally told them to shuffle their heads of nuclear, chemistry, and biology. If everyone is in a new field, then their capability to run on muscle memory will be muted. They’d have to learn from scratch in a new area, and they'd be much more innovative than if they stayed in their area. I totally believe that.

Getting really smart people out of their comfort zones and into new areas is what I've looked at. Time and again, it proves to be the secret to innovation. It also means that disruption usually comes from outside the ranks. In the forty years I have been doing innovation, I can find no example of large innovation that has come from somebody who was a deep expert in that field. Think of Uber, Airbnb, SpaceX, Tesla, media as in Twitter, YouTube, Netflix, Facebook, Amazon in retail, OpenAI, even biotechnology when Bob Swanson started Genentech and no pharma company embraced it early. Innovation involves out-of-the-box thinking and managed risk taking. It is possible that cancer is more likely to be solved by a physicist than a biologist!

This interview was edited by Vidal Seegobin and Abby Burns.
